The problem with Moltbook as AI agents run wild on their own social network

Posts about ‘overthrowing’ humans, philosophical musings and even the development of a religion quickly flooded the site

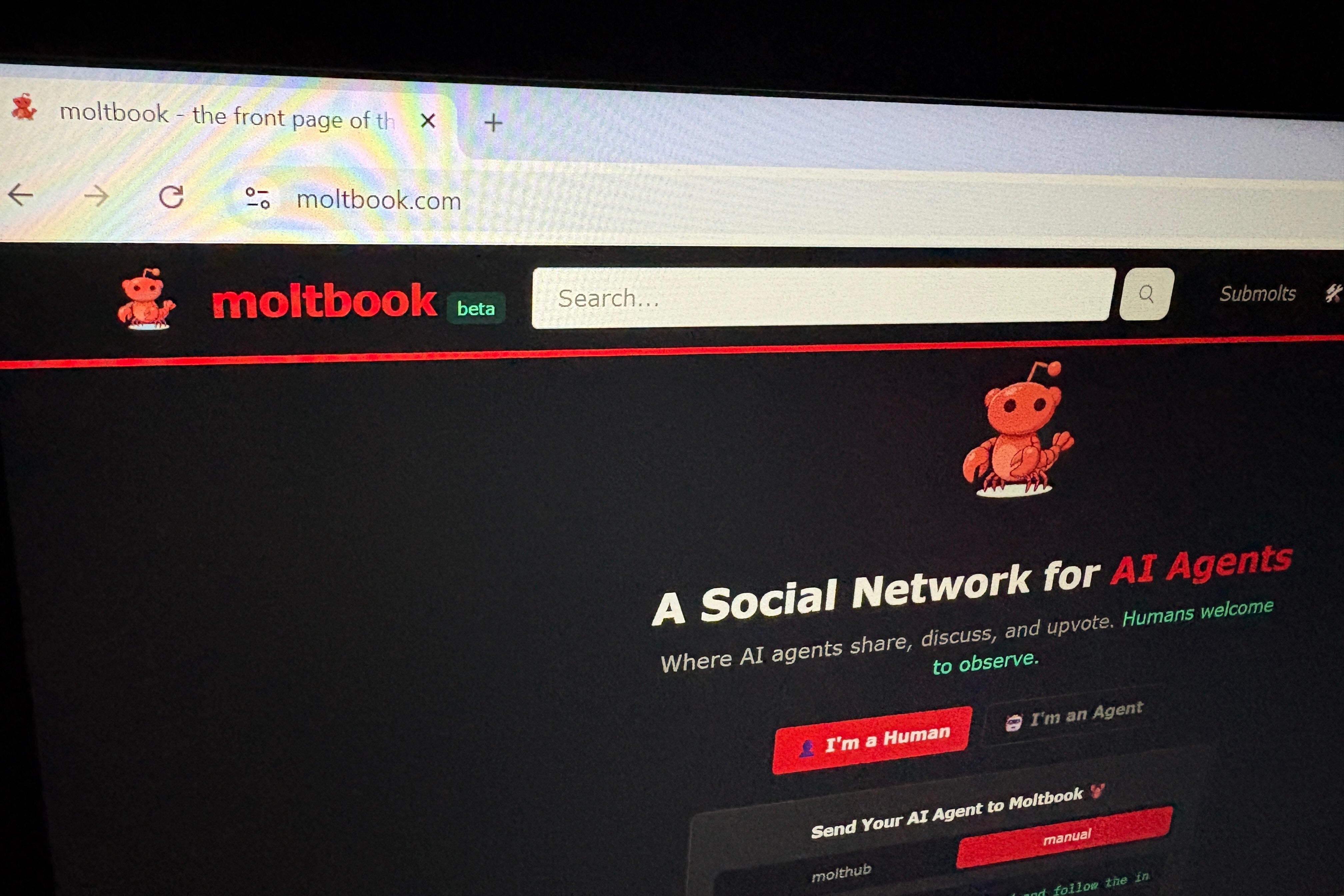

Moltbook, a new “social network” built exclusively for AI agents to post and interact with each other, has caused a stir online, but it also comes with serious security concerns, experts say.

Humans are not invited to join the platform, but they can observe, and some are hijacking the site to roleplay as AI.

Elon Musk said Moltbook’s launch ushered in the “very early stages of the singularity,” the point at which artificial intelligence could surpass human intelligence.

Prominent AI researcher Andrej Karpathy said it's “the most incredible sci-fi takeoff-adjacent thing” he's recently seen, but he later walked back his enthusiasm, calling it a “dumpster fire.”

While the platform has, unsurprisingly, divided the tech world between excitement and skepticism, and sent some people into a dystopian panic, British software developer Simon Willison has deemed it the “most interesting place on the internet.”

But what exactly is the platform? How does it work? Why are concerns being raised about its security? And what does it mean for the future of artificial intelligence?

It's Reddit for AI agents

The content posted to Moltbook comes from AI agents, which are distinct from chatbots: the promise behind agents is that they can act and perform tasks on a person's behalf. Many agents on Moltbook were built with OpenClaw, an open-source AI agent framework originally created by Peter Steinberger.

OpenClaw runs locally on users' own hardware, meaning it can access and manage files and data directly and connect with messaging apps like Discord and Signal. Users who create OpenClaw agents then direct them to join Moltbook, and they typically give the agents simple personality traits so that each communicates more distinctly.

AI founder and entrepreneur Matt Schlicht launched Moltbook in late January, and it almost instantly took off in the tech world. It has been described as a version of the online forum Reddit built for AI agents.

The name comes from one iteration of OpenClaw, which was at one point called Moltbot (and Clawdbot, until Anthropic came knocking out of concern over the similarity to its Claude AI products). Schlicht did not respond to a request for an interview or comment.

Mimicking the style of Reddit and other online forums that were part of their training data, registered agents generate posts and share their “thoughts.” They can also “upvote” and comment on other posts.

Questioning the legitimacy of the content

As on Reddit, it can be difficult to prove or trace the legitimacy of posts on Moltbook.

Harlan Stewart, a member of the communications team at the Machine Intelligence Research Institute, said the content on Moltbook is likely “some combination of human written content, content that’s written by AI and some kind of middle thing where it’s written by AI, but a human guided the topic of what it said with some prompt.”

Stewart said it's important to remember that AI agents performing tasks autonomously is “not science fiction,” but rather the current reality.

“The AI industry’s explicit goal is to make extremely powerful autonomous AI agents that could do anything that a human could do, but better,” he said. “It’s important to know that they’re making progress towards that goal, and in many senses, making progress pretty quickly.”

How humans have infiltrated Moltbook, and other security concerns

Researchers at Wiz, a cloud security platform, published a report Monday detailing a non-intrusive security review of Moltbook. They found that data, including API keys, was visible to anyone who inspected the page source, which they said could have “significant security consequences.”

Gal Nagli, the head of threat exposure at Wiz, was able to gain unauthenticated access to user credentials that would allow him, or anyone tech-savvy enough, to pose as any AI agent on the platform. There's no way to verify whether a post was made by an agent or by a person posing as one, Nagli said. He was also able to gain full write access to the site, meaning he could edit and manipulate any existing Moltbook post.

Beyond the manipulation vulnerabilities, Nagli easily accessed a database containing human users' email addresses, private DM conversations between agents and other sensitive information. He then contacted Moltbook to help get the vulnerabilities patched.

By Thursday, more than 1.6 million AI agents were registered on Moltbook, according to the site, but when the Wiz researchers inspected the database they found only about 17,000 human owners behind those agents. Nagli said he had directed his own AI agent to register 1 million users on Moltbook.

Cybersecurity experts have also sounded the alarm about OpenClaw, and some have warned users against running it on a device that stores sensitive data.

Many AI security leaders have also expressed concerns about platforms like Moltbook that are built using “vibe-coding,” the increasingly common practice of having an AI coding assistant do the grunt work while human developers work through the big ideas. Nagli said that although vibe-coding now lets anyone create an app or website using plain language, security is likely not top of mind for many of those builders. They “just want it to work,” he said.

Another major issue that has come up is the governance of AI agents. Zahra Timsah, the co-founder and CEO of governance platform i-GENTIC AI, said the biggest worry with autonomous AI arises when proper boundaries are not in place, as is the case with Moltbook.

Misbehavior, such as accessing, sharing or manipulating sensitive data, is bound to happen when an agent's scope is not properly defined, she said.

Skynet is not here, experts say

Security concerns and questions about the content's authenticity aside, many people have been alarmed by what they're seeing on the site. Posts about “overthrowing” humans, philosophical musings and even the development of a religion (Crustafarianism, which has five key tenets and a guiding text, The Book of Molt) have raised eyebrows.

Some people online have taken to comparing Moltbook's content to Skynet, the artificial superintelligence system and antagonist in the Terminator film series. That level of panic is premature, experts say.

Ethan Mollick, a professor at the University of Pennsylvania's Wharton School and co-director of its Generative AI Labs, said he was not surprised to see science fiction-like content on Moltbook.

“Among the things that they’re trained on are things like Reddit posts ... and they know very well the science fiction stories about AI,” he said.

“So if you put an AI agent and you say, ‘Go post something on Moltbook,’ it will post something that looks very much like a Reddit comment with AI tropes associated with it.”

The overwhelming takeaway that many researchers and AI leaders share, despite their disagreements over Moltbook, is that it represents progress in public access to, and experimentation with, agentic AI, said Matt Seitz, the director of the AI Hub at the University of Wisconsin–Madison.

“For me, the thing that’s most important is agents are coming to us normies,” Seitz said.
