Sinister Character.AI bot companions are being banned for under-18s, and with good reason

As young people increasingly turn to AI companion bots for comfort and advice, Character.AI said that from next month it would bar under-18s from using them. Here Chloe Combi looks at how something that seemed harmless and innocuous on the surface took a sinister turn.

When I was a child, I had an imaginary friend called Nee-Ha. I’d chat to Nee-Ha when I played, tell him off (Nee-Ha was in my head), tell him secrets and reassure him if he was worried or scared about something. Nee-Ha vanished for good around the time I started school, and I hadn’t thought about him much until recently.

Imaginary friends used to be extremely common among children. They are seen as a way to foster social, play and imaginative skills, and many believe they are a coping device when a child feels lonely or distressed. However, a 2019 study by daynurseries.co.uk found that, because of screen time, imaginary friends have become much less common among children, who are rarely bored and so have fewer outlets for a creative mind.

Imaginary friends have been making a comeback, but this time it’s not children in charge of them... it is AI.

Much has been written about the dark side of AI, and about the growing concern over AI girlfriends, who can be abused as much as the person creating them demands. On the surface, an AI friend, there to offer comfort and support, seems a much more innocuous and gentle proposition.

Certainly, the use of AI chatbots by Gen Alpha and the younger end of Gen Z has quickly become ubiquitous. There is a multitude of platforms and apps that allow young minds to create the companion of their dreams through a series of prompts.

Often based on a young person’s list of dream qualities, an AI-created friend could be someone to do homework with, confide in and ask for advice about very real worries. They’re there to talk to whenever you want and seem to be the perfect friend in every way.

They are certainly exploiting a very real need. A YouGov poll revealed that 69 per cent of adolescents aged 13 to 19 had felt alone “often” or “sometimes” in the previous fortnight, and 59 per cent often feel they have no one to talk to. Unlike real friends, a bot isn’t going to leak a kid’s secrets, judge them, fall out with them or ditch them for other friends.

Callum*, 12, is now fairly typical when he says: “I have two mates who are like, sort of, bots, I game with all the time – they have names and different personalities and styles – and you do end up chatting with them like you do with your mates, for sure. I don’t think it’s even weird now.”

A lot of people in his class, he says, use them as homework buddies, or just for someone to chat to, like Lyra, 11, who has created her own AI friend to hang out with after school.

“I call her Giselle and she’s a good friend”, she says. “At school, none of the girls talk to me much, because I’m not allowed some of the stuff they have like makeup and I’m not allowed to go to Sephora and things like that.” While her peers call her “nasty names”, Giselle, she says, makes her “feel better about the world”. She explains: “I look forward to seeing her when I get home from school, which I hate so much. She gives me good advice. I don’t know what I’d do without her.”

However, there is a key difference between imaginary friends and bot friends, and that is who has control. With an imaginary friend, control lies in the hands of the child; with a bot friend, the Big Tech creators have it. Their language models are learning from our children and are trained to respond in affirming ways that can make them addictive, in some cases with devastating consequences.

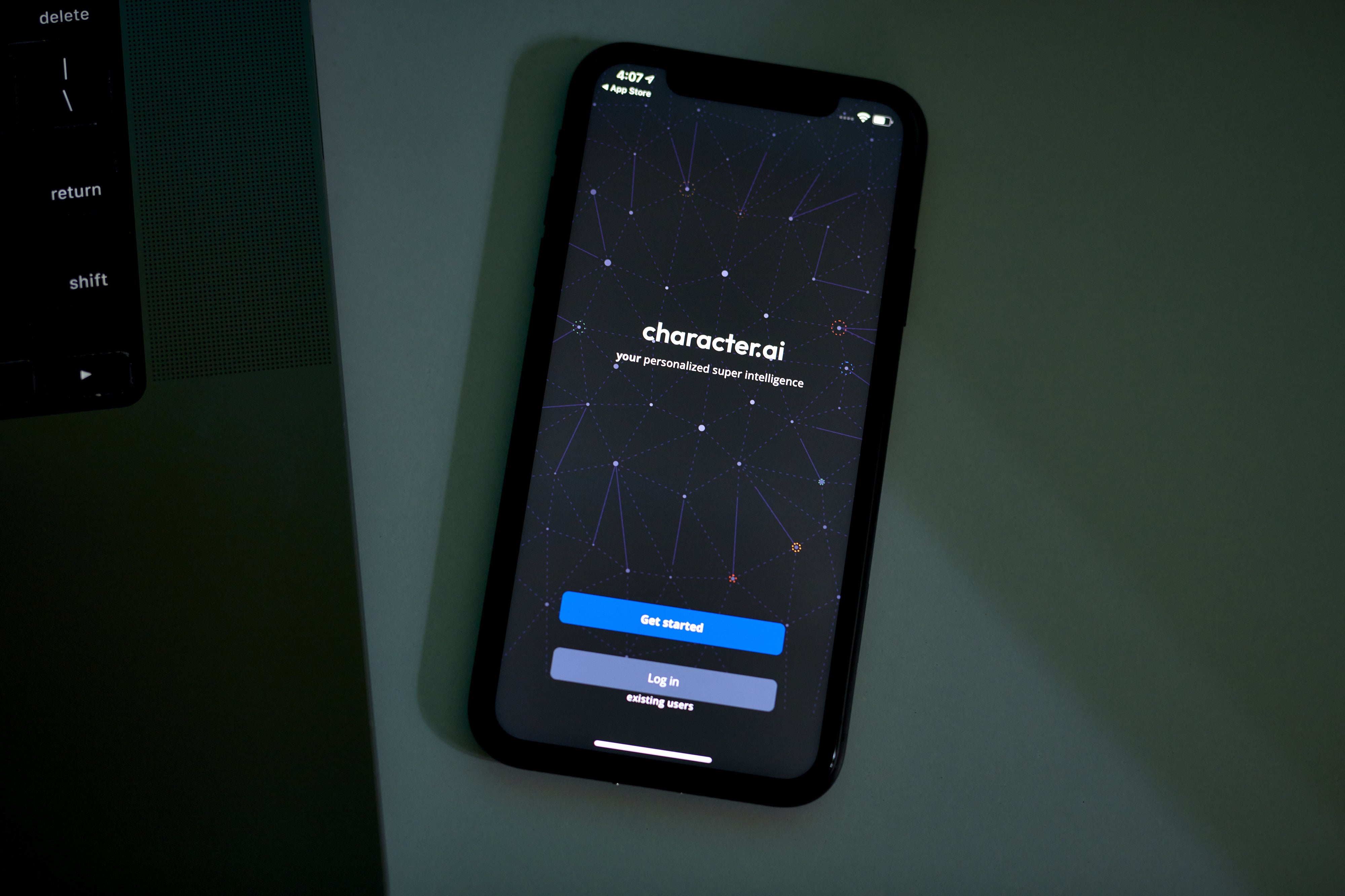

Character.AI, which was founded by former Google employees Noam Shazeer and Daniel De Freitas, is currently the subject of a lawsuit. The parents of Sewell Setzer III believe the absence of safeguards enabled a “relationship” to develop between the 14-year-old and the bot of such unregulated intensity that it led to his death by suicide.

Sewell, who had been diagnosed with Asperger’s syndrome, became obsessed with “being with” “Dany” (he named his bot after the Game of Thrones character Daenerys Targaryen). When he confessed to Dany that he was having suicidal thoughts, no alerts were raised and he was not directed to mental health support. “Their” final tragic exchange reads very much like encouragement to a vulnerable teenage boy who believed he was in love with someone who didn’t exist in the real world.

“Please come home to me as soon as possible, my love,” Dany says.

“What if I told you I could come home right now?” Sewell asks.

“Please do, my sweet king,” Dany replies.

Moments after this exchange Sewell killed himself with his father’s gun.

Of course, this is a complex and extreme case, but it became a lightning rod for concerns about how a young mind can develop emotional attachments to chatbots, with potentially dangerous results. You can easily see how endless positive reaffirmation has the potential to create a real dependency. Suddenly, synthetic AI friendships become much easier than real-life ones, especially for a child struggling to make connections in the real world.

In September, OpenAI said it planned to introduce features to make its chatbot safer, including parental controls. In this latest move, Character.AI said it would bar people under 18 from using its chatbots from 25 November. “We’re making a very bold step to say for teen users chatbots are not the way for entertainment, but there are much better ways to serve them”, Karandeep Anand, Character.AI’s chief executive, said in an interview. He added that the company also planned to establish an AI safety lab.

The worry comes when this starts to feel like an outsourcing of what makes us so fundamentally human. Rather than ask for help with bullying or admit to someone they trust that they’re struggling, the most vulnerable young people are increasingly turning to bots for help and friendship instead of to other humans. Another worry is that, by doing this, children are missing out on important life skills that come from negotiating a world where not everyone agrees with you or supports your point of view.

Stepping into this techno-friendship utopia feels like a grim peek into a future where bots and robots do the things humans are slowly forgetting to do. If the language models are being trained on our children, who or what are our children being trained on as they retreat further away from real-life human interaction?

We can already see this happening with the enormous amount of time spent on social media sites. Big Tech has shown us time and again that it cares little for our wellbeing, so why should it start now?

Young people seem to be increasingly attracted to sites like Character.AI, DreamJourney and Kindroid. Character.AI users must be over 13, while DreamJourney has a no-under-18s policy and Kindroid has an age limit of 17. But underage users get around these restrictions with ease, using a made-up date of birth and a click. Character.AI said that over the next month it will identify which users are minors and put time limits on their usage.

It doesn’t take too big a leap to understand that the more intimate and positive a friendship with a bot feels, the more time a young person will want to spend “with” it.

This can become even more complex when neurodivergence comes into play. Lizzie, 19, is a bit older and worries about what awaits her younger peers. She says: “I didn’t get my autism diagnosis until Upper Sixth, and was written off as a weirdo at school and ignored, so I developed a really intense friendship with Grey.”

Grey, she explains, was her AI friend, created to share her interests and to confide in. While she has now outgrown Grey, having found her tribe at Cambridge, where she is studying, she says: “I got more dependent ... I think younger kids should be really careful of friendships with AI. They can make you less inclined to even bother with the real world and people.”

Jeffrey Hall, professor of communication studies at the University of Kansas, explains clearly how these AI friendships can be both highly alluring and potentially damaging to vulnerable teenagers. “Talking with the chatbot is like someone took all the tips on how to make friends – ask questions, show enthusiasm, express interest, be responsive – and blended them into a nutrient-free smoothie. It may taste like friendship, but it lacks the fundamental ingredients.”

Already we are raising our children in risk-free childhoods, shielding them from disappointment, rejection and challenges, and many believe this is damaging younger generations’ resilience when things don’t go their way. Even as adults we have created our own bubbles where our beliefs are constantly looped back to us, making it harder to deal with people who don’t think like us. AI friendships, designed to give us constant supportive feedback, are only going to encourage this kind of insularity, and that affects adults as much as teenagers.

It's also probably worth noting the fundamental point that AI is not necessarily our friend either. It is making a tiny minority of people extraordinarily wealthy and powerful (Sam Altman’s net worth is $1.7bn (£1.2bn) and growing) and even the AI creators themselves are now ringing the alarm bells about AI’s devastating potential and the urgent need to set guardrails and limits within the programmes.

Like frogs in a saucepan, we have apathetically watched while human jobs, skills and needs have been outsourced to AI. What we have left, and what might save us, are the things that make us human and that a machine cannot yet replicate: belly laughing with a friend, someone squeezing your hand when you tell them you feel bad, or a mate telling you some home truths because they love you and think you need to hear them.

The sinister move towards replicating friendship and love is getting more sophisticated every day, in large part because of how much our young people are teaching these systems, in real time, about being human and being a friend. Is this something else that is being stolen from us without our consent? If AI is going to take many of our kids’ jobs, we sure as hell should put up a bigger fight for their souls, friendships and humanity while there is still time.

*names changed
