New AI can predict people’s time of death with high degree of accuracy, study finds

‘Clearly, our model should not be used by an insurance company,’ scientists say

A groundbreaking new ChatGPT-like artificial intelligence system trained with the life stories of over a million people is highly accurate in predicting the lives of individuals as well as their risk of early death, according to a new study.

The AI model was trained on the personal data of Denmark’s population and was shown to predict people’s chances of dying more accurately than any existing system, scientists from the Technical University of Denmark (DTU) said.

In the study, researchers analysed the health and labour market data for 6 million Danes collected from 2008 to 2020, including information on individuals’ education, visits to doctors and hospitals, resulting diagnoses, income, and occupation.

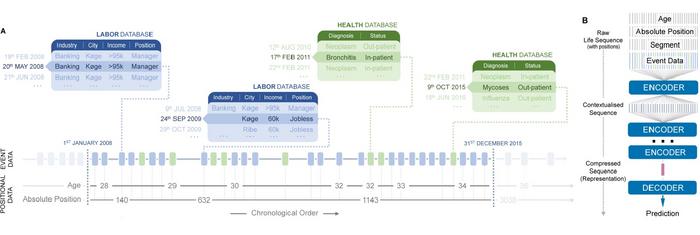

Scientists converted the dataset into words to train a large language model, dubbed “life2vec”, that is similar to the technology behind AI apps like ChatGPT.

Once the AI model learned the patterns in the data, it could outperform other advanced systems and predict outcomes such as personality and time of death with high accuracy, according to the study, published in the journal Nature Computational Science on Tuesday.

Researchers took data on a group of people aged 35 to 65 from the dataset – half of whom died between 2016 and 2020 – and asked the AI system to predict who lived and who died.

They found that its predictions were 11 per cent more accurate than those of any other existing AI model or of the method used by life insurance companies to price policies.

“What’s exciting is to consider human life as a long sequence of events, similar to how a sentence in a language consists of a series of words,” study first author Sune Lehmann from DTU said.

“This is usually the type of task for which transformer models in AI are used, but in our experiments, we use them to analyze what we call life sequences, i.e., events that have happened in human life,” Dr Lehmann said.

Using the model, researchers sought answers to general questions like the chances of a person dying within four years.

They found that the model’s responses are consistent with existing findings: when all other factors are considered, individuals in a leadership position or with a high income are more likely to survive, while being male, skilled, or having a mental diagnosis is associated with a higher risk of dying.

“We used the model to address the fundamental question: to what extent can we predict events in your future based on conditions and events in your past?” Dr Lehmann said.

“Scientifically, what is exciting for us is not so much the prediction itself, but the aspects of data that enable the model to provide such precise answers,” he added.

The model could also accurately predict the outcomes of a personality test in a section of the population better than existing AI systems.

“Our framework allows researchers to identify new potential mechanisms that impact life outcomes and associated possibilities for personalized interventions,” researchers wrote in the study.

However, scientists caution that the model should not be used by life insurance firms due to ethical concerns.

“Clearly, our model should not be used by an insurance company, because the whole idea of insurance is that, by sharing the lack of knowledge of who is going to be the unlucky person struck by some incident, or death, or losing your backpack, we can kind of share this burden,” Dr Lehmann told New Scientist.

Researchers also warn that there are other ethical issues surrounding the use of life2vec such as protecting sensitive data, privacy, and the role of bias in data.

“We stress that our work is an exploration of what is possible but should only be used in real-world applications under regulations that protect the rights of individuals,” they said.
