Google’s DeepMind unveils AI robot that can teach itself without supervision

RoboCat marks major progress towards creating general-purpose robots capable of performing millions of everyday tasks

Google’s AI division DeepMind has unveiled a self-improving robot agent capable of teaching itself new tasks without human supervision.

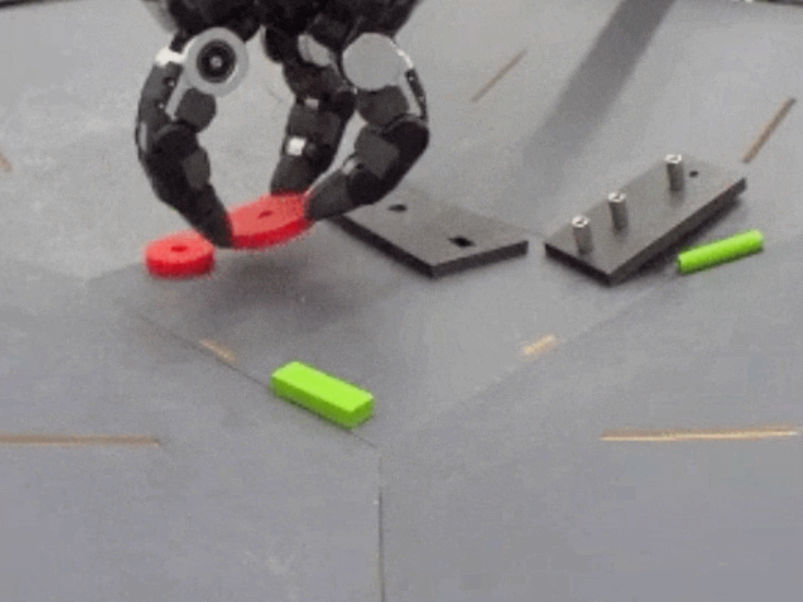

DeepMind claims that its RoboCat AI model is the first in the world able to learn and solve a variety of problems across different real-world robots, such as robotic arms.

Data generated from the robot’s actions allows the AI to improve its technique, which can then be transferred to other robotic systems.

The London-based company, which Google acquired in 2014, said the technology marks significant progress towards building general-purpose robots that can perform everyday tasks.

“RoboCat learns much faster than other state-of-the-art models,” DeepMind researchers wrote in a blog post detailing the company’s latest artificial intelligence model.

“It can pick up a new task with as few as 100 demonstrations because it draws from a large and diverse dataset. This capability will help accelerate robotics research, as it reduces the need for human-supervised training, and is an important step towards creating a general-purpose robot.”

RoboCat was inspired by DeepMind’s AI model Gato, which learns by analysing text, images and actions.

Researchers trained RoboCat by showing demonstrations of a human-controlled robot arm performing tasks, such as fitting shapes through holes and picking up pieces of fruit.

RoboCat was then left to train by itself, steadily improving as it practised each task an average of 10,000 times without supervision.

The AI-powered robot trained itself to perform 253 tasks during DeepMind’s experiments, across four different types of robots. It was also able to adapt its self-improvement training to transition from a two-fingered to a three-fingered robot arm.

Further development could see the AI learn previously unseen tasks, the researchers claimed.

“RoboCat has a virtuous cycle of training: the more new tasks it learns, the better it gets at learning additional new tasks,” the blog post stated.

“These improvements were due to RoboCat’s growing breadth of experience, similar to how people develop a more diverse range of skills as they deepen their learning in a given domain.”

The research follows a growing trend of self-teaching robotic systems, which hold the promise of realising the long-envisioned sci-fi trope of domestic robots.

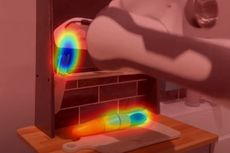

Engineers from Carnegie Mellon University announced this month that they had built a robot capable of learning new skills by watching videos of humans performing them.

The team demonstrated a robot that was able to open drawers and pick up knives in order to chop fruit, with each household task taking just 25 minutes to learn.
