Beheaded in Philadelphia, punched in Silicon Valley and smeared with barbecue sauce in San Francisco: Why do humans hurt robots?

Jonah Engel Bromwich explores human aversion to machines

A hitchhiking robot was beheaded in Philadelphia. A security robot was punched to the ground in Silicon Valley. Another security bot, in San Francisco, was covered in a tarp and smeared with barbecue sauce.

Why do people lash out at robots, particularly those built to resemble humans? It is a global phenomenon. In a mall in Osaka, Japan, three boys beat a humanoid robot with all their strength. In Moscow, a man attacked a teaching robot named Alantim with a baseball bat, kicking it to the ground, while the robot pleaded for help.

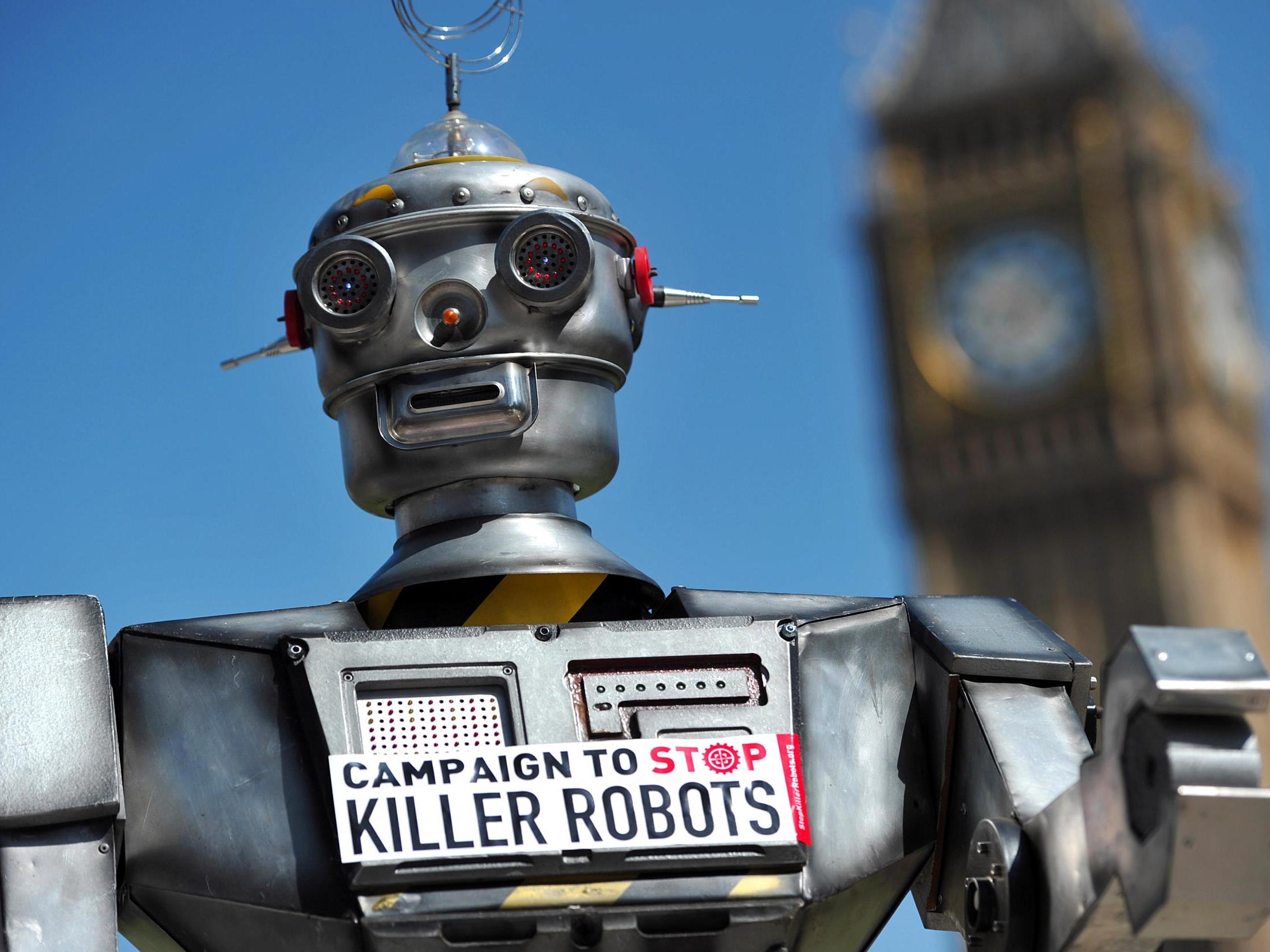

Why do we act this way? Are we secretly terrified that robots will take our jobs? Upend our societies? Control our every move with their ever-expanding capabilities and air of quiet malice?

Quite possibly. The spectre of insurrection is embedded in the word “robot” itself. It was first used to refer to automatons by the Czech playwright Karel Čapek, who repurposed a word that had referred to a system of indentured servitude or serfdom. The feudal fear of peasant revolt was transplanted to mechanical servants, and worries of a robot uprising have lingered ever since.

Comedian Aristotle Georgeson has found that videos of people physically attacking robots are among the most popular he posts on Instagram under the pseudonym Blake Webber. And much of the feedback he gets tends to reflect the fear of robot uprisings.

Georgeson said some commenters approve of the robot beatings, “saying we should be doing this so they can never rise up. But there’s this whole other group that says we shouldn’t be doing this because when they” — the robots — “see these videos they’re going to be pissed”.

But Agnieszka Wykowska, a cognitive neuroscientist and editor-in-chief of the International Journal of Social Robotics, said that while human antagonism toward robots has different forms and motivations, it often resembles the ways humans hurt each other. Robot abuse, she said, might stem from the tribal psychology of insiders and outsiders.

“You have an agent, the robot, that is in a different category than humans,” she said. “So you probably very easily engage in this psychological mechanism of social ostracism because it’s an out-group member. That’s something to discuss: the dehumanisation of robots even though they’re not humans.”

Paradoxically, our tendency to dehumanise robots comes from the instinct to anthropomorphise them. William Santana Li, chief executive of Knightscope, the largest provider of security robots in the United States (two of which were battered in San Francisco), said that while he avoids treating his products as if they were sentient beings, his clients seem unable to help themselves.

“Our clients, a significant majority, end up naming the machines themselves,” he said. “There’s Holmes and Watson, there’s Rosie, there’s Steve, there’s CB2, there’s CX3PO.”

Wykowska said that cruelty that results from this anthropomorphising might reflect “Frankenstein syndrome,” because “we are afraid of this thing that we don’t really fully understand, because it’s a little bit similar to us, but not quite enough.”

In his paper “Who Is Afraid of the Humanoid?” Frédéric Kaplan, digital humanities chair at École Polytechnique Fédérale de Lausanne in Switzerland, suggested Westerners have been taught to see themselves as biologically informed machines, and perhaps are unable to separate the idea of humanity from a vision of machines. The nervous system could only be understood after the discovery of electricity, he wrote. DNA is necessarily explained as an analog to computer code. And the human heart is often understood as a mechanical pump. At every turn, Kaplan wrote, “we see ourselves in the mirror of the machines that we can build.”

This does not explain human destruction of less humanoid machines. Dozens of vigilantes have thrown rocks at driverless cars in Arizona, for example, and incident reports from San Francisco suggest human drivers are intentionally crashing into driverless cars. These robot altercations may have more to do with fear of unemployment, or with vengeance: A paper published last year by economists at the Massachusetts Institute of Technology and Boston University suggested that each robot added to a discrete zone of economic activity “reduces employment by about six workers.” Blue-collar occupations were hit particularly hard. And a self-driving car killed a woman in Tempe, Arizona, in March, which at least one man, brandishing a rifle, cited as the reason for his dislike of the machines.

Abuse of humanoid robots can be disturbing and expensive, but there may be a solution, said Wykowska, the neuroscientist. She described a colleague in the field of social robotics telling a story recently about robots being introduced to a kindergarten class. He said that “kids have this tendency of being very brutal to the robot, they would kick the robot, they would be cruel to it, they would be really not nice,” she recalled.

“That went on until the point that the caregiver started giving names to the robots. So the robots suddenly were not just robots but Andy, Joe and Sally. At that moment, the brutal behaviour stopped. So, it’s very interesting because again it’s sort of like giving a name to the robot immediately puts it a little closer to the in-group.”

Li shared a similar experience when asked about the poor treatment that had befallen some of Knightscope’s security robots.

“The easiest thing for us to do is when we go to a new place, the first day, before we even unload the machine, is a town hall, a lunch-and-learn,” he said. “Come meet the robot, have some cake, some naming contest and have a rational conversation about what the machine does and doesn’t do. And after you do that, all is good. 100 per cent.”

And if you don’t?

“If you don’t do that,” he said, “you get an outrage.”

The New York Times
