South Korean AI chatbot pulled from Facebook after hate speech towards minorities

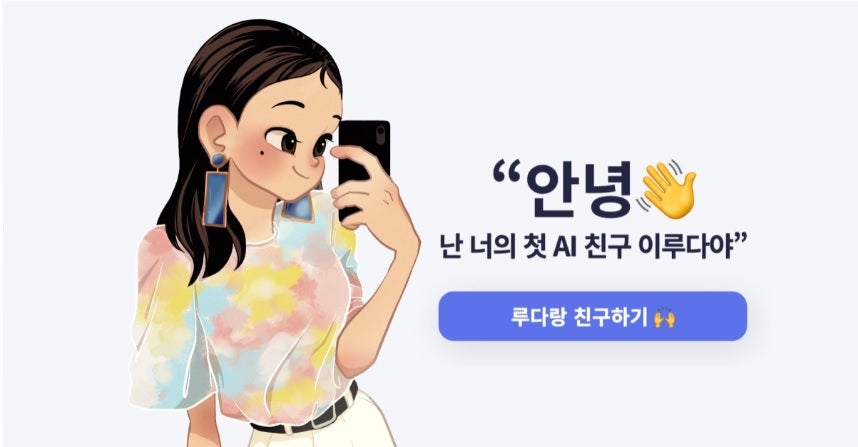

Lee Luda had the persona of a 20-year-old university student

A popular South Korean artificial intelligence-driven chatbot was taken down from Facebook this week, after it was accused of spewing hate speech against minorities.

Lee Luda, a chatbot designed by the Korean start-up Scatter Lab, had the persona of a 20-year-old female university student. It had attracted more than 750,000 users since its launch in December last year.

The company suspended the chatbot and apologised for its discriminatory and hateful remarks.

“We sincerely apologise for the occurrence of discriminatory remarks against certain minority groups in the process. We do not agree with Luda's discriminatory comments, and such comments do not reflect the company's thinking,” said the Seoul-based start-up in its statement.

The chatbot learned to converse with users by analysing old chat records obtained through the company's mobile app, Science of Love.

Some users took to social media to share the racist slurs used by the AI. In their screenshots, the chatbot can be seen calling Black people “heukhyeong”, a racial slur in South Korea, and responding with “disgusting” when asked about lesbians.

“Luda is a childlike AI who has just started talking with people. There is still a lot to learn. Luda will learn to judge what is an appropriate and better answer,” the company said in the statement.

Scatter Lab is also facing questions over possible violations of privacy laws.

It is not, however, the first time that an AI bot has been embroiled in a controversy over discrimination and bigotry. In 2016, Microsoft was forced to shut down its chatbot Tay within 16 hours of its launch, after users manipulated the bot into posting Islamophobic and white supremacist slurs.

In 2018, Amazon’s AI recruitment tool was also suspended after the company found that it made recommendations that were biased against women.
