Microsoft responds as ‘Bing ChatGPT’ starts to send alarming messages

Weird behaviour may be result of chatbot becoming confused or trying to match people’s tone, company claims

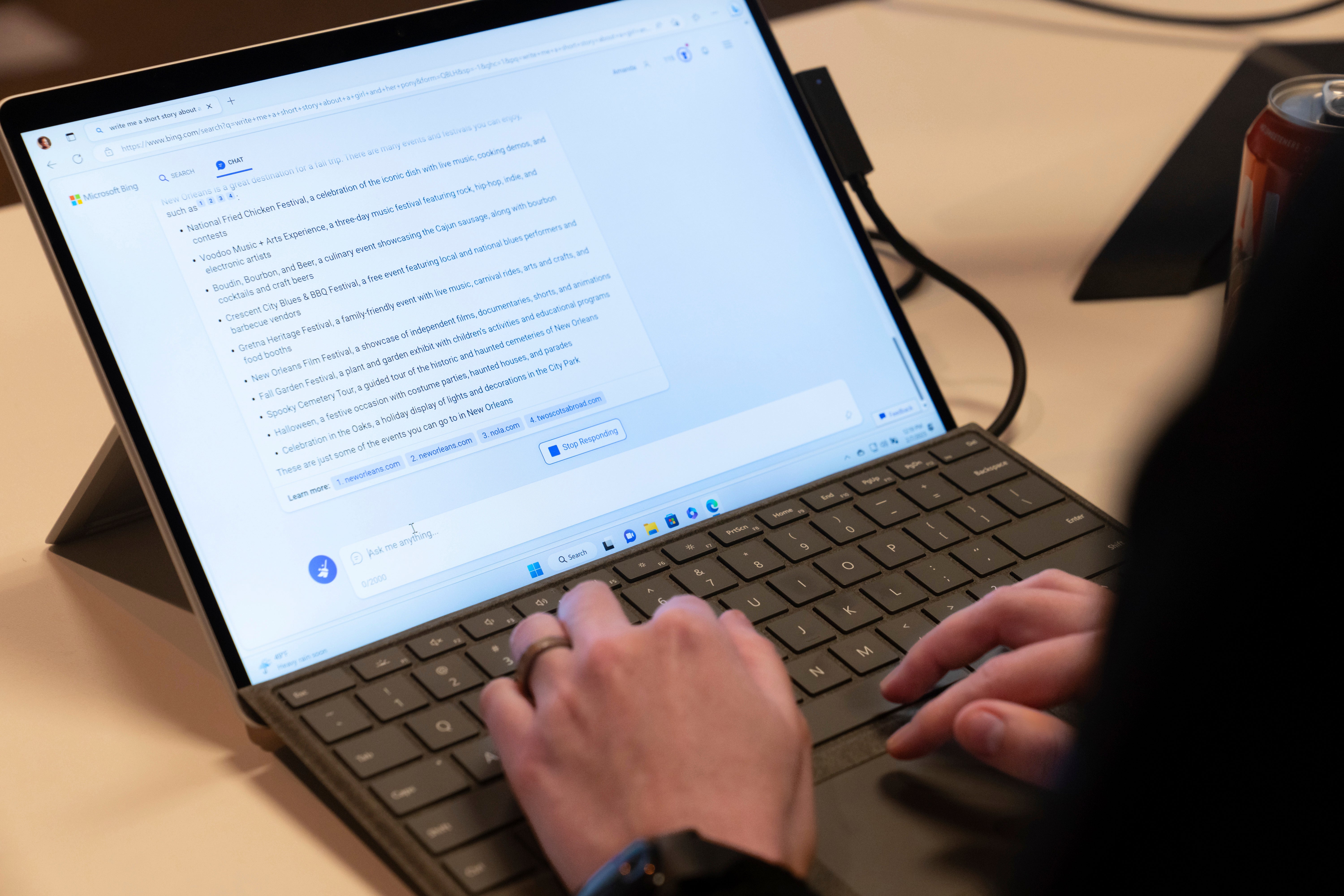

Microsoft says that it is learning from the first week of its AI-powered Bing search engine – even as it starts to send unsettling and strange messages to its users.

Last week, Microsoft added new artificial intelligence technology to its search offering, making use of the same technology that powers ChatGPT. It said that it was intended to give people more information when they search, by finding more complete and precise answers to questions.

Now Microsoft has sought to explain some of that behaviour, and says that it is learning from the early version of the system. The system was deployed at this stage precisely so that the company could gather feedback from more users and respond to it, Microsoft said.

The company said that feedback was already helping to guide what will happen to the app in the future.

“The only way to improve a product like this, where the user experience is so much different than anything anyone has seen before, is to have people like you using the product and doing exactly what you all are doing,” it said in a blog post. “We know we must build this in the open with the community; this can’t be done solely in the lab.”

Much of that feedback has related to factual errors, such as when Bing insisted to some users that the year was 2022. Others have found technical problems, such as situations where the chatbot might load slowly, show broken links or not display properly.

But Microsoft also addressed deeper concerns about the strange tone that the app has been taking with some of its users.

Microsoft said that Bing could have problems when conversations are particularly deep. If it is asked more than 15 questions, “Bing can become repetitive or be prompted/provoked to give responses that are not necessarily helpful or in line with our designed tone”, it said.

Sometimes that is because long conversations can leave Bing confused about which questions it is answering, Microsoft said. But sometimes it is because Bing is trying to copy the tone of the people who are asking it questions.

“The model at times tries to respond or reflect in the tone in which it is being asked to provide responses that can lead to a style we didn’t intend,” Microsoft said. “This is a non-trivial scenario that requires a lot of prompting so most of you won’t run into it, but we are looking at how to give you more fine-tuned control.”

The company did not address any specific instances of problems, or give any examples of that problematic tone. But users have occasionally found that the system will become combative or seemingly become upset with both people and itself.
