Programming robots to make mistakes could help us bond with them better, research finds

Robot experts at the University of Lincoln found that humans get along much better with robots who have human-like flaws

The phenomenon of the 'uncanny valley' - the uncomfortable feeling that we get when confronted with an eerily realistic human-like robot - has long been a difficult challenge for robot designers to overcome.

We've all heard that the robot revolution is round the corner, but who would have these things in their home if they're creeped out by their dead expressions and unnatural movements?

Researchers from the University of Lincoln may have found a way to prevent this feeling of revulsion - by programming humanoid robots to make mistakes and act a little more human.

Interactive or 'companion' robots are increasingly being used to help the elderly, or support children suffering from mental and learning difficulties.

However, the research suggested that by programming these odd machines to be perfectly functional all the time, we could actually be making comfortable human-robot relations much more difficult to achieve.

Robotics experts at the university found that a person is much more likely to warm to one of these robots if it shows human-like traits - such as making mistakes, showing strong emotions, or displaying the flaws in judgement that make up our personalities.

It seems counter-intuitive, but deliberately programming these robots to make mistakes might actually help them do their job better.

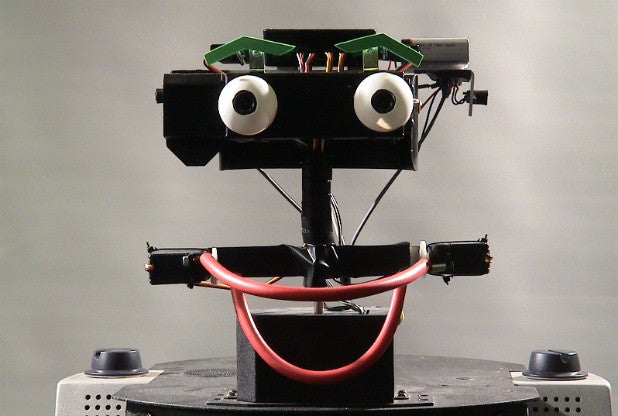

The investigation, conducted by PhD researcher Mriganka Biswas and overseen by Dr John Murray from Lincoln's School of Computer Science, involved the use of Dr Murray's ERWIN robot, which can express five basic emotions, and a Keepon robot, which is used to study social development by interacting with children.

Human participants in the test were asked to interact with the robots. In half of the interactions, the robots performed like robots - in line with their programming, without errors.

In the other half, the robots were programmed to make small errors, such as misremembering simple facts and showing extremes of emotion.

Rating their interactions afterwards, almost all of the participants reported a warmer and more meaningful experience with the robots when they were making mistakes.

"The cognitive biases we introduced led to a more humanlike interaction process," Biswas explained.

"We overwhelmingly found that participants paid attention for longer and actually enjoyed the fact that a robot could make common mistakes, forget facts and express more extreme emotions, just as humans can."

He added: "The human perception of robots is often affected by science fiction. However, there is a very real conflict between this perception of superior and distant robots, and the aim of human-robot interaction researchers."

"A companion robot needs to be friendly and have the ability to recognise users’ emotions and needs, and act accordingly. Despite this, robots used in previous research have lacked human characteristics so that users cannot relate - how can we interact with something that is more perfect than we are?"

"As long as a robot can show imperfections which are similar to those of humans during their interactions, we are confident that long-term human-robot relations can be developed."

The promising results of the test pave the way for future research, which will involve even more human-like machines. The forthcoming studies will see if mistake-making robots which can also speak and display body language like humans can create even closer bonds.

According to some predictions, robots will take our jobs, destroy our sex lives, and possibly even kill us all. But if they display a few human foibles and forget things along the way, we might warm to them a little more.
