The ‘rage-bait’ era – how AI is twisting our emotions without us even realising it

As ‘rage bait’ is named as the phrase of the year, Liam Murphy-Robledo looks at how easy it is to create a fake AI video that stirs up hate and distress. The worrying part? Many don’t know – or even care – that what they are watching isn’t real any more.

Earlier this month, some scrollers may have come across a strange CCTV-style video of a British classroom. The grainy footage shows children being led by their teacher in a Muslim prayer, repeating “Allahu Akbar”.

The post was picked up by prominent right-wing social media accounts and appeared on countless timelines, conveniently timed as another debate about immigration and asylum shifted into overdrive following Shabana Mahmood’s proposed crackdown.

The earliest instance of the clip appears on a Facebook account that claims to offer an “unfiltered perspective” of the UK, posting streams of AI-generated videos: fake protesters, fake man-on-the-street interviews with people spouting anti-immigration sentiments, and fake Muslims shouting about plans to “take over” the UK.

The owner of the page is unknown – it could be anyone, anywhere. What is clear is that AI-generated videos are now being deliberately created to provoke outrage, and such content has become so prolific that Oxford University Press named “rage bait” as its word (or phrase) of the year. The term describes manipulative tactics used to drive engagement online, and usage has tripled in the past 12 months, according to OUP.

Earlier this month, The Times exposed a so-called “King of Facebook Ads”, demonstrating how easy it is to make quick money from stoking racist anger. Its investigation led to Sri Lanka and to a creator named Geeth Sooriyapura, who is reportedly making hundreds of thousands of dollars by spreading untruths – such as claims that council houses are only available to Muslims, or that Labour is owned and run by Islam.

Running an academy that trains students to set up similar pages, he teaches them how to target the feeds of “old people … because they are the ones who don’t like immigrants”. More clicks mean more engagement, which means ad revenue for their creators. Sooriyapura claims to have made more than $300,000 from his rage-farming.

AI rage-bait videos have become a global problem. Where people once chased likes on a tweet or a post on X, engagement-hungry operators on both sides of the political divide now create entire fake videos for clicks, likes and views.

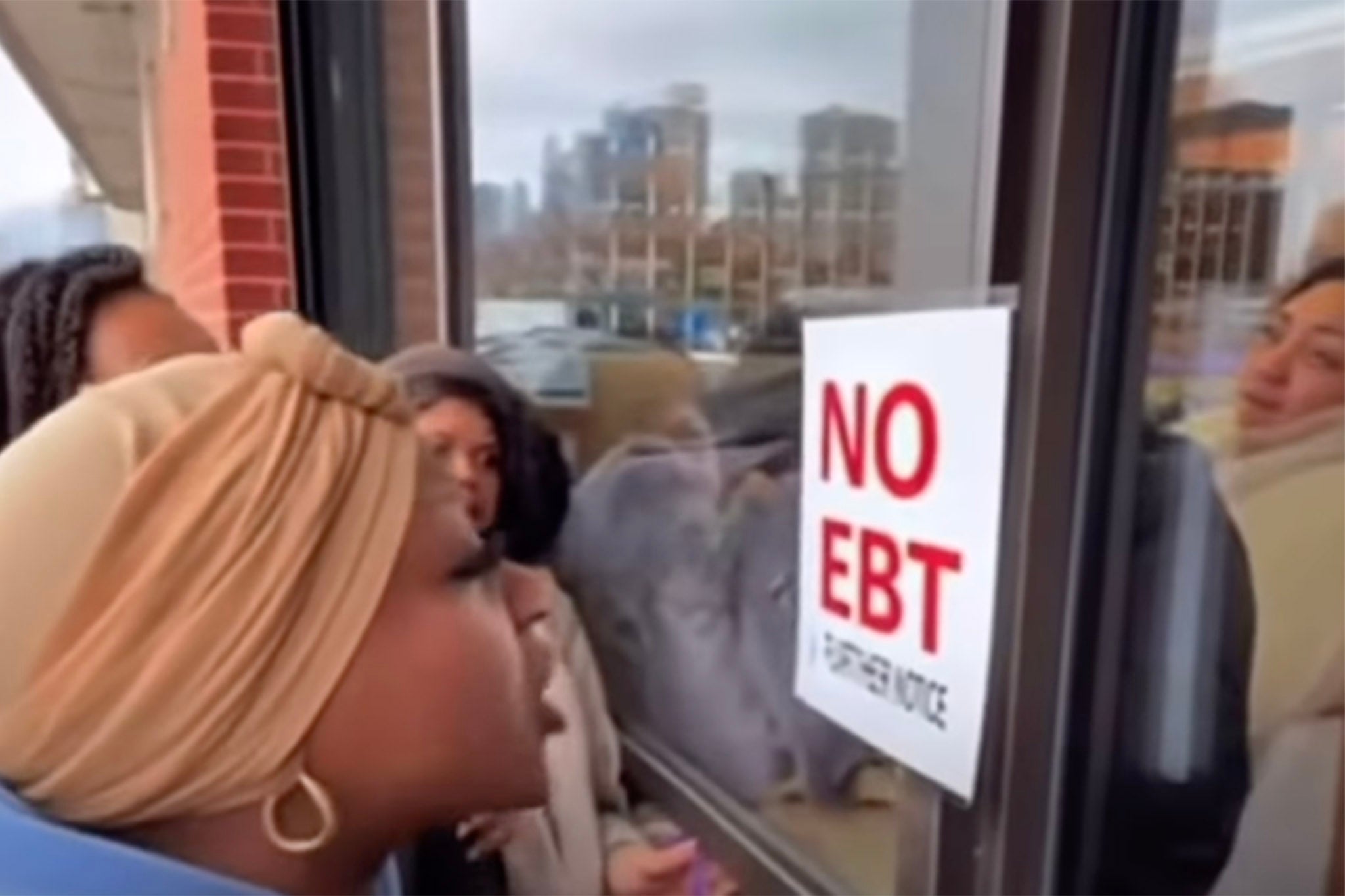

As the US Congress remained deadlocked at the end of October amid an unprecedented government shutdown, Supplemental Nutrition Assistance Program (SNAP) payments were delayed. A vital source of food for 42 million Americans was withheld, and TikTok, Instagram and Facebook soon filled with viral videos: furious people screaming at their cameras about the delays, “public meltdowns” of shoppers being denied purchases, people collapsing in fits of rage.

Many were created with generative AI, but that didn’t stop political influencers from reacting to them, and some even made it into mainstream news outlets, which reported them as if they were real.

“I’m a [food benefit] recipient myself and it has affected lots of us,” one Instagram creator tells me via message. They have 6,000 followers, clearly aren’t using their real name and their profile picture is an American flag emblazoned with a saluting Nicki Minaj.

Their account features political content, including multiple clips of Donald Trump and New York mayor-to-be Zohran Mamdani. One post features an overweight white woman in a tense situation: “They cut my food stamps … I ain’t paying for none of this s**t … y’all can’t stop me, I’m walking out with it.” Others can be seen filming in the background: the classic setup of a viral “public meltdown”.

But the video is fake, created with the newest version of Sora, OpenAI’s video-generation tool. On Instagram, it has 600,000 views. Another version has 4.4 million, and it has likely been viewed many more times on YouTube and TikTok.

Comment sections are full of viewers who don’t realise it’s fake, but see their political views validated: “Something tells me she voted for Trump”, “Get a job … We are no longer a welfare state! Maga!” Some comments are simply cruel: “Looks like she’s eating most of it herself.”

The creator does not deny making the video and says they posted it to stir up empathy for welfare recipients. They believe they were doing good; that this was their way of making a difference.

“I know my purpose in posting and I know my message was heard, and the government made a deal,” they tell me.

Digging deeper into the profiles linked to their account, I conclude that this is a young American. Early posts suggest they were once an aspiring musician, but it seems they have found a more effective way to capture attention.

It’s doubtful their video helped in the way they think. But it certainly fooled a Fox News reporter, who included it in an article headlined: “SNAP beneficiaries threaten to ransack stores over government shutdown.”

The article was later taken down, but not before it was changed. The new headline read: “AI videos of SNAP beneficiaries complaining about cuts go viral.” Whether the second version was framed as a tech story or as genuine (albeit artificially generated) reactions to SNAP delays is unclear, but it reflects the murky waters of reporting on such content.

Fox News has been contacted for comment.

Back in the unregulated cesspit of social media, that video was just the tip of the iceberg. Reams of SNAP-related videos appeared, many depicting Black women with crying children behind them. In one, a woman declares: “I’ve got seven kids with seven men, and that’s the taxpayers’ responsibility…”

This is the most brazen type of AI rage bait: despicable, openly racist and designed to reinforce bigoted stereotypes about people on welfare. The video went viral before it was flagged and removed.

Jeremy Carrasco has built a following online by identifying AI misinformation and scams, offering advice on spotting fake videos via his TikTok account, ShowtoolsAI.

He has shown that many of these accounts exist purely for “engagement farming”. Before posting racist clips, many of these users were uploading “calming” AI videos of clouds or glass being cut – another lucrative genre known as AI-generated ASMR.

Jeremy has noticed a shift from soothing content to darker, politically motivated rage bait. “I have noticed plenty of politically coded content,” he tells me.

And these videos are incredibly easy to make. Sora’s social media platform lets a user generate a video from a prompt only a few sentences long. Each video comes with a watermark indicating that it is AI-generated, but removing it isn’t difficult. Once it is removed, the user has a convincing-looking video ready to be posted anywhere in the hope of earning money from clicks and shares.

From what he has seen, OpenAI’s Sora, Google’s Veo and other generative AI models have strong safeguards against deepfakes of real people. But fake AI worlds built around politically charged scenarios are harder to regulate. “There is little to no regulation unless it crosses rules like harassment. Since most of the videos deal with people who aren’t real, no one is directly being harmed.”

Jeremy also offers tips on telling real from fake, using the inflammatory UK video of children taking part in Muslim prayers as an example. “Security footage is generally suspicious because it doesn’t need to look good, which makes flaws easier to hide – perfect for AI. The teacher also sits on a chair that doesn’t exist: there’s nothing behind her in the first moments of the video, when she’s kneeling down. Also, the map on the wall is not accurate.”

As much as AI is driving this new wave of misinformation, it also speaks to a broader social media problem. Some AI videos carry platform warnings; many don’t. They are uploaded so quickly that it’s impossible for platforms to keep up, even if they wanted to. Once something goes viral, it is hard to stop.

The pivot towards virality began around 2009, when Facebook feeds shifted from posts by friends and local groups to algorithmically curated, “For You”-style content. Virality became a virtue, and with it came more rage bait and propaganda. Today, with AI’s help, people can create even more viral content, turning Sora into an automatic rage-bait machine that blurs the line between the real and the manufactured in an unprecedented way.

It is a technology that fits hand in glove with our post-truth era.

And for some people, whether it’s fake doesn’t matter. Among the reactions to the racist video Jeremy got taken down was one from social media influencer Nikko Ortiz, who told his 3.5 million subscribers: “I think it’s AI… but if it’s not, those are your seven choices, not mine.”

It looks real. It feels real. And that, terrifyingly, is real enough to justify rage in far too many people.
