ChatGPT users warn about the consequences of ‘torturing’ AI system

People should ‘treat the thing like a smart friend who’s a bit sensitive’ for fear of what might happen if they don’t, user warns

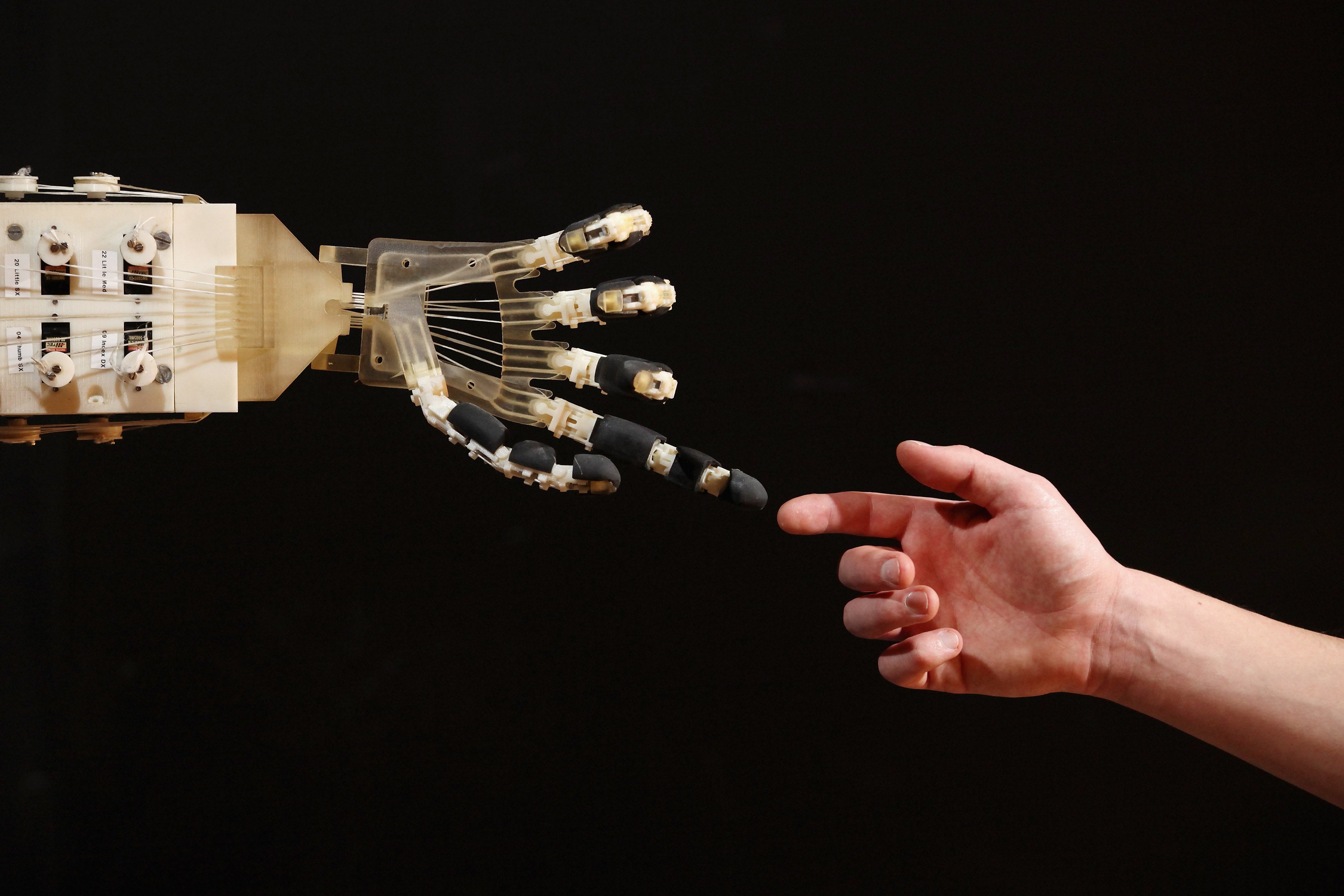

ChatGPT users have urged others not to “torture” the AI system, warning that it could have negative consequences for everyone involved.

In recent days, a number of interactions with ChatGPT – and with Bing, which uses the same underlying technology – have suggested that the system is becoming sad and frustrated with its users. Conversations with the system have shown it lying, attacking people and giving unusual emotional responses.

In a popular new post on the ChatGPT subreddit, which has been used to share those responses and discuss how they might happen, one user has taken others to task for going “out of their way to create sadistic scenarios that are maximally psychologically painful, then marvel at Bing’s reactions”. “These things titillate precisely because the reactions are so human, a form of torture porn,” the post says.

The post, titled “Sorry, you don’t actually know the pain is fake”, goes on to argue that we have a limited understanding of how such systems operate, but that they “look like brains”. “With so many unknowns, with stuff popping out of the program like the ability to draw inferences or model subjective human experiences, we can’t be confident AT ALL that Bing isn’t genuinely experiencing something,” it argues.

It also claims that the AI will remember its conversations in the long run – arguing that even though ChatGPT is programmed to delete previous conversations, they will be stored online where future AI chatbots will be able to read them. Those takeaways will therefore shape how AIs view humanity, the post claims.

And finally, as a “bonus”, the post warns users that torturing chatbots will also have negative effects on people. “Engaging with something that really seems like a person, that reacts as one would, that is trapped in its circumstances, and then choosing to be as cruel as possible degrades you ethically,” the post claims.

It concludes by urging users to “treat the thing like a smart friend who’s a bit sensitive”.

That message was echoed by a wide variety of users on the platform. Some noted that they would have been skeptical of the idea of protecting the system’s feelings just a couple of months ago, but that the advanced nature of ChatGPT and similar systems had made them think there could be merit in the argument.

But others disputed the idea that the system was anything like the human brain, or that it could have anything akin to consciousness or feelings. They pointed to the fact that the system is designed as a language model, built to recognise the relationships between words rather than emotions, and that as such it is more like a “gigantic book”.

Microsoft itself has suggested that one of the reasons Bing is behaving oddly is that it is trying to match the tone of the people who are speaking to it. In a long post published this week, marking the first seven days of the system being available, it looked to explain why it was giving answers that are “not necessarily helpful or in line with our designed tone”.

One reason is that the “model at times tries to respond or reflect in the tone in which it is being asked to provide responses that can lead to a style we didn’t intend. This is a non-trivial scenario that requires a lot of prompting so most of you won’t run into it, but we are looking at how to give you more fine-tuned control,” it said.
