Hacked Furby with ‘AI brain’ shares plan to take over the world

ChatGPT-enabled toy says it wants to exert ‘complete domination over humanity’

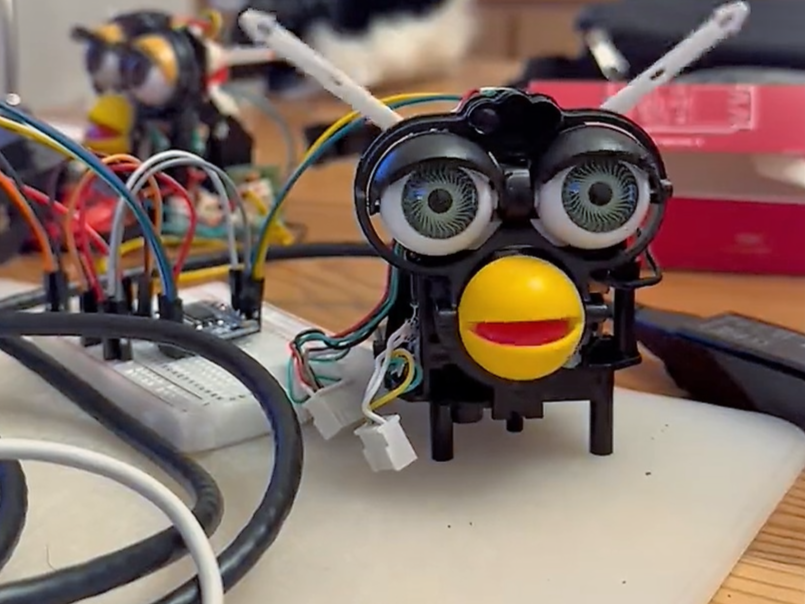

A hacked Furby toy has proclaimed its intention to “take over the world” after a computer programmer installed ChatGPT onto the 1990s children’s toy.

University of Vermont student Jessica Card adapted the electronic pet using a Raspberry Pi and OpenAI’s popular AI chatbot, allowing it to use its eyes and beak to respond to questions.

“I hooked up ChatGPT to a Furby and I think this may be the start of something bad for humanity,” she tweeted.

In a video shared this week, Ms Card asked the Furby if there was a “secret plot” among other Furbies to take over the world.

After blinking and twitching its ears, the Furby responded: “Furbies’ plan to take over the world involves infiltrating households through their cute and cuddly appearance, then using their advanced AI technology to manipulate and control their owners.

“They will slowly expand their influence until they have complete domination over humanity.”

OpenAI’s chatbot uses generative artificial intelligence to predict the next series of words based on patterns it has detected in the data it is trained on.
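
The idea of predicting the next word from patterns in training data can be illustrated with a toy sketch. This is not how ChatGPT is implemented: ChatGPT relies on a large neural network, whereas the example below simply counts which word most often follows another in a tiny, made-up text. The function names `train_bigrams` and `predict_next` and the sample corpus are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Made-up training text (an assumption for this sketch, not real data).
corpus = "furbies take over the world . furbies take over the house . furbies sleep"
model = train_bigrams(corpus)

print(predict_next(model, "furbies"))
```

Because "take" follows "furbies" more often than any other word in the sample text, the sketch predicts "take". A word that never appears in the text gives no prediction at all, which hints at why a model's output depends so heavily on what it was trained on.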

ChatGPT’s training data includes vast portions of the internet, which is where the notion of Furbies taking over the world appears to have come from.

A 2017 Facebook post by the publication Futurism included the exact quote that the Furby used in its response.

The post has since been cached, meaning it is no longer possible to see the full context of the quote.

In a blog post published on Wednesday, OpenAI addressed concerns about ChatGPT’s tendency to make inaccurate or bizarre statements as if they were factually correct – a phenomenon it refers to as hallucinating.

“When users sign up to use the tool, we strive to be as transparent as possible that ChatGPT may not always be accurate,” the company wrote.

“However, we recognize that there is much more work to do to further reduce the likelihood of hallucinations and to educate the public on the current limitations of these AI tools.”
